The MI300X is AMD’s latest and greatest AI GPU flagship, designed to compete with the Nvidia H100 — the upcoming MI325X will take on the H200, with MI350 and MI400 gunning for the Blackwell B200. Chips and Cheese tested AMD’s monster GPU in various low-level and AI benchmarks and found that it often vastly outperforms Nvidia’s H100.

However, before we get started, there are some caveats worth mentioning. Chips and Cheese’s article does not mention what level of tuning was done on the various test systems, and software can have a major impact on performance — Nvidia says it doubled the inference performance of the H100 via software updates since launch, for example. The site also had contact with AMD but apparently not with Nvidia, so there could be some missed settings that could affect results. Chips and Cheese also compared the MI300X primarily to the PCIe version of the H100 in its low-level testing, which is the weakest version of the H100 with the lowest specs.

Caveats and disclaimers aside, Chips and Cheese’s low-level benchmarks reveal that the MI300X, built on AMD’s bleeding edge CDNA 3 architecture, is a good design from a hardware perspective. The chip’s caching performance looks downright impressive, thanks to its combination of four caches in total, including a 32KB L1 cache, 16KB scalar cache, 4MB L2 cache, and a massive 256MB Infinity Cache (which serves as an L3 cache). CDNA 3 is the first architecture to inherit Infinity Cache, which first debuted on RDNA 2 (AMD’s 2nd generation gaming graphics architecture driving the RX 6000 series).

Not only are there four caches for the MI300X GPU cores to play with, but they are also fast. Chips and Cheese’s cache benchmarks show that the MI300X’s cache bandwidth is substantially better than Nvidia’s H100 across all relevant cache levels. L1 cache performance shows the MI300X boasting 1.6x greater bandwidth compared to the H100, 3.49x greater bandwidth from the L2 cache, and 3.12x greater bandwidth from the MI300X’s last level cache, which would be its Infinity Cache.

Even with higher clocks on the SXM version of the H100, we wouldn’t expect these cache results to radically change. But cache bandwidth and latency on its own doesn’t necessarily tell how a GPU will perform in real-world workloads. The RTX 4090 for example has 27% higher LLC bandwidth than the H100 PCIe, but there are plenty of workloads where the H100 would prove far more capable.

Similar advantages are also prevalent in the MI300X’s VRAM and local memory performance (i.e., the scalar cache). The AMD GPU has 2.72X as much local HBM3 memory, with 2.66x more VRAM bandwidth than the H100 PCIe. The only area in the memory tests where the AMD GPU loses is in the memory latency results, where the H100 is 57% faster.

Keep in mind that this is looking at the lowest spec H100 PCIe card that has 80GB of HBM2E. Later versions like the H200 include up to 141GB of HBM3E, with up to 4.8 TB/s of bandwidth. The H100 SXM variant also has substantially faster HBM providing up to 3.35 TB/s of bandwidth, so the use of the 2.0 TB/s card clearly hinders memory bandwidth.

Moving on, raw compute throughput is another category where Chips and Cheese saw the MI300X dominate Nvidia’s H100 GPU. Instruction throughput is ridiculously in favor of the AMD chip. At times the MI300X was 5X faster than the H100, and at worst it was roughly 40% faster. Chips and Cheese’s instruction throughput results take into account INT32, FP32, FP16 and INT8 compute.

It’s also interesting to look a the current and previous generation results from these data center GPUs. The H100 PCIe shows stronger performance in certain workloads, like FP16 FMAs and Adds, but elsewhere it’s only slightly faster than the A100. AMD’s MI300X on the other hand shows universally massive improvements over the previous generation MI210.

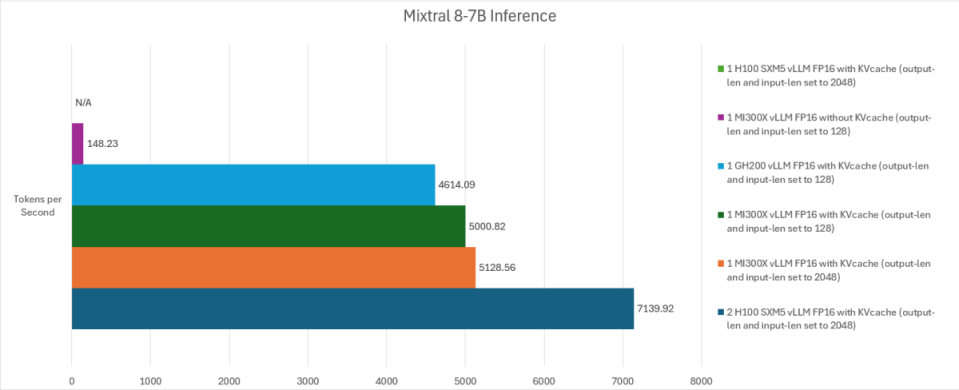

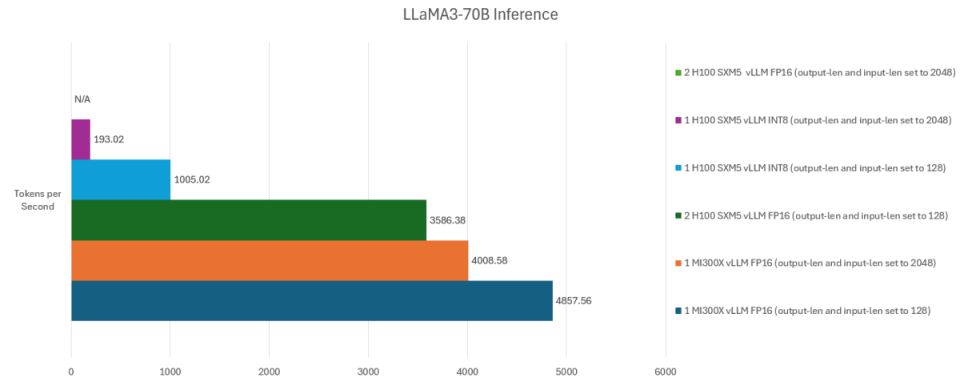

One of the last and likely most important tests Chips and Cheese conducted was AI inference testing, not only with the MI300X and H100 but with GH200 as well (for one of the tests) — and unlike the low-level testing, the Nvidia GPUs in this case are the faster SXM variants. Chips and Cheese’s conducted two tests, using Mixtral 8-7B and LLaMA3-70B. Apparently due to how the servers were rented, the hardware configurations are also a more diverse and inconsistent lot, so not every configuration got tested in each benchmark.

The Mixtral results show how various configuration options can make a big difference — a single H100 80GB card runs out of memory, for example, while the MI300X without KVcache also performs poorly. GH200 does much better, though the MI300X still holds a lead, while two H100 SXM5 GPUs achieve about 40% higher performance. (The two H100 GPUs were necessary to even attempt to run the model at the selected settings.)

Shifting over to the LLaMA3-70B results, we get a different set of hardware. This time even two H100 GPUs failed to run the model due to a lack of memory (with input and output lengths set to 2048 and using FP16). A single H100 with INT8 also performed quite poorly with the same 2048 input/output length setting. Dropping the lengths to 128 improved performance quite a lot, though it was still far behind the MI300X. Two H100 GPUs with input/output lengths of 128 using INT8 finally start to look at least somewhat competitive.

For the MI300X with it’s massive 192GB of memory, it was able to run both 2048 and 128 lengths using FP16, with the latter providing the best result of 4,858. Unfortunately, Nvidia’s H200 wasn’t tested here due to time and server rental constraints. We’d like to have seen it as potentially it would have yielded better results than the H100.

More testing, please!

While the compute and cache performance results show how powerful AMD’s MI300X can be, the AI tests clearly demonstrate that AI-inference tuning can be the difference between a horribly performing product and a class-leading product. The biggest problem we have with many AI performance results in general — not just these Chips and Cheese results — is that it’s often unclear what level of optimizations are present in the software stack and settings for each GPU.

It’s a safe bet that AMD knows a thing or two about improving performance on its GPUs. Likewise, we’d expect Nvidia to have some knowledge about how to improve performance on its hardware. Nvidia fired back at AMD’s MI300X performance claims last year, for example, saying that numbers presented by AMD were clearly suboptimal. And here’s where there are some questions left unanswered.

The introduction to the Chips and Cheese article says, “We would also like to thank Elio from NScale who assisted us with optimizing our LLM runs as well as a few folks from AMD who helped with making sure our results were reproducible on other MI300X systems.” Nvidia did not weigh in on whether the H100 results were reproducible. Even if they could be reproduced, were the tests being run in a less than optimal way?

Hopefully, future testing can also involve Nvidia folks, and ideally both parties can help with any tuning or other questions. And while we’re talking about things we’d like to see, getting Intel’s Ponte Vecchio or Gaudi3 into the testing would be awesome. We’d also like to see the SMX variant of the H100 used for testing, as that’s more directly comparable to the OAM MI300X GPU.

[Note: clamchowder provided additional details on Twitter about the testing and hardware. We’ve reached out to suggest some Nvidia contacts, which they apparently didn’t have because we really do appreciate seeing these sorts of benchmarks and would love to have any questions about testing methodology addressed. And if there’s one thing I’m certain of, it’s that tuning for AI workloads can have a dramatic impact. We’ve also adjusted the wording in several areas of the text to clarify things. —Jarred]

The conclusion begins with “Final Words: Attacking NVIDIA’s Hardware Dominance.” That’s definitely AMD’s intent, and the CDNA 3 architecture and MI300X are a big step in the right direction. Based on these results, there are workloads where MI300X not only competes with an H100 but can claim the performance crown. However, as we’ve seen with so many other benchmarks of data center AI hardware, and as the site itself states, “the devil is in the details.” From using a clearly slower PCIe H100 card for the non-inference tests to a scattershot selection of hardware for inference benchmarks, there’s the potential for missing pieces of information. Chips and Cheese tells us this was due to limited hardware availability, and getting access to this sort of hardware is difficult. In short, we want to see more of these sorts of benchmarks — independent tests — done in a way that lets all the hardware perform to the best of its ability.

The MI300X’s raw cache, bandwidth, and compute results look very good. But these GPUs are also purpose-built for scaleout and large installations, so even if a single MI300X clearly beats a single H100 (or H200, for that matter), that doesn’t say how the picture might change with dozens, hundreds, or even thousands of GPUs working in tandem. The software and ecosystem are also important, and Nvidia has held a lead there with CUDA in the past. Low-level benchmarks of hardware like this can be interesting, and the inference results show what can happen when your GPU doesn’t have enough VRAM for a particular model. However, we suspect this is far from the final word on the AMD MI300X and Nvidia H100.

Signup bonus from